Demo Exercise 2

Read all the documents in this lab script before you start doing or typing anything. If anything is unclear, ask before acting.

Before you do anything, make sure you know what effect it will have. Otherwise you could damage the VM or your ROS setup and be unable to work properly.

Tasks

In this exercise, you will implement a Convolutional Neural Network (CNN) to control the movement direction of a mobile robot platform in CoppeliaSim.

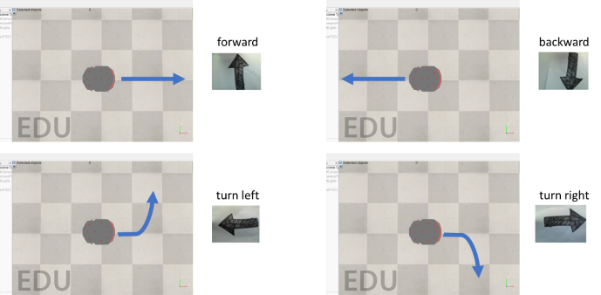

Your CNN will use data from a vision sensor to recognise 4 different robot actions, or classes:

- move forwards

- move backwards

- turn to the left

- turn to the right
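One way to link the four classes to the robot is a lookup table from class index to a velocity command. This is a minimal sketch, assuming the robot is driven by a forward speed and a turn rate (as in a `geometry_msgs/Twist` message, where a positive angular speed turns left) and that the classes are indexed in the order listed above. The names `Action`, `ACTIONS` and `action_for_class`, the index order and the speed values are all hypothetical and must be matched to your own label encoding and CoppeliaSim scene.

```python
from typing import NamedTuple


class Action(NamedTuple):
    name: str
    linear_x: float   # forward speed (positive = forwards); assumed m/s
    angular_z: float  # turn rate (positive = turn left); assumed rad/s


# Hypothetical class order: it must match the label encoding used in training.
ACTIONS = (
    Action("forward", 0.2, 0.0),
    Action("backward", -0.2, 0.0),
    Action("left", 0.0, 0.5),
    Action("right", 0.0, -0.5),
)


def action_for_class(class_index: int) -> Action:
    """Return the robot command associated with a predicted class index."""
    if not 0 <= class_index < len(ACTIONS):
        raise ValueError(f"unknown class index: {class_index}")
    return ACTIONS[class_index]


print(action_for_class(2))  # Action(name='left', linear_x=0.0, angular_z=0.5)
```

Keeping the mapping in a single table means the training script and the real-time script can share exactly the same class order.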

The figure above illustrates an example scenario in CoppeliaSim, together with the input data and the robot action expected for this exercise.

Complete the tasks described below for Demo Exercise 2.

Task 1

Download the ZIP file Database_arrows.zip from Moodle. It contains the training and validation datasets of arrows pointing in four directions (left, right, forward and downward).

Use this dataset to create 4 classes for recognising the arrows and controlling the mobile robot in CoppeliaSim.
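If the extracted dataset stores each direction in its own subfolder (an assumption: check the actual layout of Database_arrows.zip and the loading code in the template), the four classes can be built by sorting the folder names, mapping each one to an integer, and one-hot encoding those integers for a softmax output layer. The helper names below are hypothetical.

```python
import os

import numpy as np


def class_index_from_folders(root):
    """Map each subfolder of `root` (assumed one per arrow direction) to an integer label."""
    names = sorted(
        d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d))
    )
    return {name: i for i, name in enumerate(names)}


def one_hot(labels, num_classes):
    """Convert integer labels of shape (N,) into one-hot rows of shape (N, num_classes)."""
    labels = np.asarray(labels, dtype=int)
    out = np.zeros((labels.size, num_classes), dtype=np.float32)
    out[np.arange(labels.size), labels] = 1.0
    return out
```

Sorting makes the mapping reproducible between runs. Whatever order results, the same mapping has to be used later when turning predictions into robot commands.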

Download the ZIP file Lab_files_cnn_arrows.zip from Moodle. It contains the following files:

- CNN_arrows_training_template.py: template file with example code to load the database of arrows and their corresponding labels. You will use this file to create your CNN model, run the training and testing phases, and save the trained model.

- CNN_arrows_recognition_real-time_ros_template.py: template file for the real-time recognition of arrows randomly selected from the test dataset. This file will send the recognition output to CoppeliaSim via ROS to control the movement direction of the mobile robot.

Task 2

Open the CNN_arrows_training_template.py file and study the example code provided to load the database and labels.

Create a CNN model that recognises the 4 arrow directions (classes).
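A possible starting point for the model is sketched below. It assumes TensorFlow/Keras is used (check which framework the template imports), greyscale images resized to 64×64 pixels with raw values in [0, 255], and one-hot labels. The input size, layer sizes, dropout rate and the name `build_arrow_cnn` are placeholders to tune while working towards the accuracy target.

```python
from tensorflow.keras import layers, models


def build_arrow_cnn(input_shape=(64, 64, 1), num_classes=4):
    """Small CNN: two convolution/pooling stages followed by a softmax classifier."""
    model = models.Sequential([
        layers.Input(shape=input_shape),
        layers.Rescaling(1.0 / 255),  # assumes raw pixel values in [0, 255]
        layers.Conv2D(32, 3, activation="relu", padding="same"),
        layers.MaxPooling2D(),
        layers.Conv2D(64, 3, activation="relu", padding="same"),
        layers.MaxPooling2D(),
        layers.Flatten(),
        layers.Dense(128, activation="relu"),
        layers.Dropout(0.3),  # helps against overfitting on a small dataset
        layers.Dense(num_classes, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="categorical_crossentropy",  # expects one-hot labels
        metrics=["accuracy"],
    )
    return model
```

Training might then look like `history = model.fit(x_train, y_train, validation_data=(x_val, y_val), epochs=15)`, where the array names and epoch count are hypothetical. If you add data augmentation, avoid horizontal and vertical flips: flipping a left arrow produces a right arrow that still carries the label "left".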

Repeat the training and testing phases until your model achieves a recognition accuracy equal to or greater than 85%, then save your model. You will need to adjust the number of layers and the parameters of the CNN to achieve the required accuracy.
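The 85% criterion can be checked on the test set by comparing the most probable predicted class with the true class for every sample. This minimal sketch assumes softmax probabilities and one-hot labels stored as NumPy arrays; the function names are hypothetical.

```python
import numpy as np

TARGET_ACCURACY = 0.85  # minimum test accuracy required in Task 2


def accuracy_from_probs(probabilities, one_hot_labels):
    """Fraction of samples whose most probable class equals the true class."""
    predicted = np.argmax(probabilities, axis=1)
    actual = np.argmax(one_hot_labels, axis=1)
    return float(np.mean(predicted == actual))


def meets_target(probabilities, one_hot_labels, target=TARGET_ACCURACY):
    """Report the test accuracy and say whether it reaches the target."""
    acc = accuracy_from_probs(probabilities, one_hot_labels)
    print(f"test accuracy: {acc:.1%} (target: {target:.0%})")
    return acc >= target
```

With Keras, for example, `if meets_target(model.predict(x_test), y_test): model.save("arrows_cnn.keras")` (file name hypothetical); otherwise change the architecture or training settings and train again. `model.evaluate` reports the same accuracy if the model was compiled with `metrics=["accuracy"]`.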

Save the plots of the training and testing accuracy and include them in your report.
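When training with Keras, `model.fit(..., validation_data=...)` returns a `History` object whose `history` dict holds per-epoch `accuracy` and `val_accuracy` lists (given `metrics=["accuracy"]` at compile time). A minimal sketch for turning that dict into a report figure follows; the function name and output path are hypothetical.

```python
import matplotlib.pyplot as plt


def save_accuracy_plot(history, path="accuracy.png"):
    """Plot per-epoch training and validation accuracy from a Keras-style history dict."""
    epochs = range(1, len(history["accuracy"]) + 1)
    fig, ax = plt.subplots(figsize=(6, 4))
    ax.plot(epochs, history["accuracy"], marker="o", label="training")
    ax.plot(epochs, history["val_accuracy"], marker="s", label="validation/test")
    ax.set_xlabel("epoch")
    ax.set_ylabel("accuracy")
    ax.set_ylim(0, 1)
    ax.legend()
    fig.tight_layout()
    fig.savefig(path, dpi=150)
    plt.close(fig)  # free the figure when saving several plots in one session
    return path
```

Call it as `save_accuracy_plot(history.history)` after training; the saved PNG can be added to the report directly.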

Task 3

Open the CNN_arrows_recognition_real-time_ros_template.py file and add the code to load the database of arrows and your previously trained CNN model.

You will need to add the code required to randomly load arrows from the test dataset (do not use images from the training dataset), recognise the arrow direction and use the output to control the movement direction of the mobile robot in CoppeliaSim.
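Drawing indices only from the test arrays guarantees that no training image is shown to the model during the demo. The sketch assumes the test images and one-hot labels are already loaded as NumPy arrays (called `x_test` and `y_test` here, hypothetical names) and that the model exposes a Keras-style `predict(batch, verbose=0)`; the helper names are also hypothetical.

```python
import numpy as np


def pick_random_test_arrow(x_test, y_test, rng):
    """Return (index, image, true_class) for one randomly chosen test sample."""
    i = int(rng.integers(len(x_test)))
    return i, x_test[i], int(np.argmax(y_test[i]))


def predict_class(model, image):
    """Run the CNN on a single image and return the most probable class index."""
    batch = np.expand_dims(image, axis=0)  # the model expects a batch dimension
    probs = model.predict(batch, verbose=0)[0]
    return int(np.argmax(probs))
```

Create the generator once with `rng = np.random.default_rng()` and call `pick_random_test_arrow(x_test, y_test, rng)` for each new arrow, then pass the image to `predict_class`.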

For visualisation purposes, let the robot move for a few seconds before loading the next arrow. Load at least 3 images during the demo; each must be correctly identified.
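The pacing requirement can be met with a loop that loads an arrow, sends the matching command repeatedly for a fixed time and then stops the robot before the next arrow. In this sketch the callables are stand-ins for your own code (hypothetical names): `next_arrow()` returns the true and predicted classes, `send(linear_x, angular_z)` publishes one command, and `commands` maps a class index to a `(linear_x, angular_z)` pair. The 3-second duration and 10 Hz rate are arbitrary choices.

```python
import time


def run_demo(next_arrow, send, commands, n_arrows=3, seconds=3.0, rate_hz=10,
             sleep=time.sleep):
    """Load `n_arrows` test arrows, driving the robot for `seconds` after each one."""
    results = []
    for _ in range(n_arrows):
        true_cls, pred_cls = next_arrow()
        linear_x, angular_z = commands[pred_cls]
        # Re-send the command at a fixed rate so the robot keeps moving.
        for _ in range(int(seconds * rate_hz)):
            send(linear_x, angular_z)
            sleep(1.0 / rate_hz)
        send(0.0, 0.0)  # stop the robot before the next arrow is loaded
        results.append((true_cls, pred_cls))
    return results
```

Passing `sleep` as a parameter lets the loop be checked without waiting in real time; inside a ROS node, `rospy.Rate(rate_hz).sleep()` is the usual way to keep a fixed publishing rate.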

Show the loaded arrow and the predicted arrow in a plot. Add the ROS code required for your program to communicate with CoppeliaSim and control the robot platform.
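For the plot and the ROS link, the sketch below assumes ROS 1 with `rospy` and a robot script in the CoppeliaSim scene that subscribes to `geometry_msgs/Twist` messages on a topic such as `/cmd_vel`. The topic, node name and helper names are assumptions to adapt to your scene (a ROS 2 setup would use `rclpy` instead). The ROS imports sit inside the function so the plotting helper can be tried without ROS installed.

```python
import matplotlib.pyplot as plt


def show_prediction(image, true_name, pred_name, ax=None, pause_s=0.1):
    """Display the loaded test arrow with its actual and predicted labels."""
    if ax is None:
        ax = plt.gca()
    ax.clear()
    ax.imshow(image.squeeze(), cmap="gray")
    ax.set_title(f"actual: {true_name}   predicted: {pred_name}")
    ax.axis("off")
    if pause_s:
        plt.pause(pause_s)  # refresh the window without blocking the control loop
    return ax


def make_twist_sender(topic="/cmd_vel", node_name="arrow_cnn_controller"):
    """Return send(linear_x, angular_z) that publishes geometry_msgs/Twist via rospy.

    Requires a ROS 1 installation; the topic must match the one the robot's
    script in the CoppeliaSim scene subscribes to (the default is an assumption).
    """
    import rospy
    from geometry_msgs.msg import Twist

    rospy.init_node(node_name, anonymous=True)
    pub = rospy.Publisher(topic, Twist, queue_size=10)

    def send(linear_x, angular_z):
        msg = Twist()
        msg.linear.x = linear_x    # forward/backward speed
        msg.angular.z = angular_z  # positive turns left
        pub.publish(msg)

    return send
```

Messages published immediately after a publisher is created can be lost while the connection to the subscriber is set up, so re-sending the command at a fixed rate is safer than publishing it once.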

Notes

Save the accuracy plots from the training and testing processes and the ROS graph, and include them in your report. Record a 2-minute video of your PC monitor showing the loaded arrow, the recognition output and the robot moving according to the recognised arrow.