| Home | Research | Software | CV | Publications | Talks | Funding and Awards | Teaching and Supervision | Collaborators |

|

SoftwareI have more than seven years of experience in the implementation and optimisation of numerical methods on a wide range of high performance architectures in various programming languages (in particular C++, Fortran 90, Python) and in different parallel frameworks (MPI, OpenMP, CUDA). In this time I contributed to a wide range of software projects from handwritten research code, which I designed and developed from scratch, to large and operational industrial codes such as the Met Office Unified Model and the NAME dispersion model. I worked with state-of-the art numerical libraries for the solution of PDEs, such as the DUNE and Firedrake finite elment libraries and the hypre preconditioner collection. In the following I list some recent projects I contributed to. |

|

Independent projects

Performance portable geometric multigrid solvers in Firedrake

Languages: Python (and some C)

Mimetic finite element discretisations of the fundamental equations of fluid dynamics can improve the stability and accuracy of numerical forecast models. If an implicit time stepping method is used, a mixed formulation of the Helmholtz equations needs to be solved, which is more complicated than a simple finite difference discretisation on a structured grid. Geometric multigrid preconditioners provide an algorithmically scalable preconditioner for the pressure correction equations. Together with Lawrence Mitchell and David Ham I implemented a solver for the mixed system in the performance portable Firedrake framework developed at Imperial College London. The code is written in python and computationally critical subroutines are implemented as automatically generated C kernels which are executed over the grid by using PyOP2 As demonstrated in [Mitchell, Müller (2016)], the performance of the bespoke geometric multigrid preconditioner is comparable to the BoomerAMG algorithm.

Matrix free and massively parallel Multi-GPU implementation of iterative solvers

Languages: C++ and CUDA-C

I designed and implemented two parallel matrix-free solvers for strongly anisotropic PDEs on GPUs. A particular challenge for memory-bound codes such as sparse linear solvers is the low bandwidth-to-FLOP ratio on novel chip architectures. To overcome this issue I developed a matrix-free algorithm to reduce global memory loads as much as possible; cache reusage was optimised by using loop-fusion. On one GPU the code is parallelised by vectorisation over the vertical columns of the horizontal grid. To optimise inter-GPU communications I based the halo-exchange operation on existing and well tested components. For this I used the Generic Communication Library (GCL, Mauro Bianco et al.) and wrote an abstraction for the easy and reliable launch of my compute kernels across multiple GPUs. I demonstrated the excellent weak scaling of the code on up to 16384 GPUs (see [Müller et al. (2013b)] and [Müller, Scheichl, Vainikko (2015)])

Massively parallel Tensor product multigrid

Language: Fortran 90

Geometric multigrid algorithms are known to be superior to AMG code, but need to be tailored to the particular problem which has to be solved. Existing general solver packages usually can not deliver this performance as they are not optimised for specific problems. To deal with the strong vertical anisotropy in atmospheric models, I implemented a massively parallel tensor-product multigrid algorithm based on horizontal coarsening and vertical line relaxation. Writing the algorithm from scratch allowed full control of the individual components and optimisation of the most critical kernels, such as the relaxation step; overlapping of calculation and communication could also be achieved very easily. Since my code is based on a matrix-free implementation tailored to this particular problem, I was able to beat the more general AMG solvers in the DUNE and hypre libraries. I demonstrated the scalability of the code to up to 65536 CPUs in [Müller, Scheichl (2014)].

DUNE implementation of a tensor product multigrid solver on spherical geometries

Language: Advanced C++

To extend the algorithm to more general spherical geometries based on semi-structured horizontal grids, I worked with Dr. Andreas Dedner (Warwick) and implemented the tensor-product geometric multigrid algorithm in the Distributed and Unified Numerics Environment (DUNE). DUNE is a framework for solving PDEs on grid based methods, performance is achieved by advanced C++ generic metaprogramming techniques. We designed a set of C++ classes for representing tensor-product grid functions and solver objects and then isolated the computationally most expensive kernels. By looping over the structured vertical direction in the innermost loops, the cost of indirect addressing in the horizontal direction can be hidden. As a result the solver shows performance which is similar to the one of the bespoke Fortran solver on fully structured grids, it has been tested on a set of realistic model problems and I showed that it scales to up to 16384 CPUs in [Dedner, Müller, Scheichl (2016)].

Flexible Multilevel Monte Carlo integrator

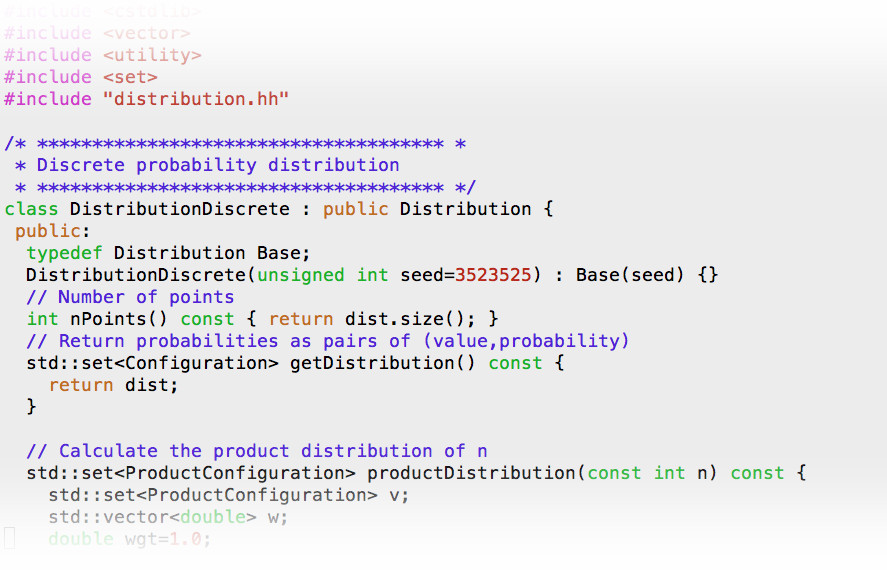

Language: C++

Advanced numerical time stepping methods for stochastic differential equations (SDEs) can improve the stability and performance of multilevel Monte Carlo algorithms. The goal of this project was to investigate the performance of different numerical time stepping schemes for Langevin equations with a set of model potentials (see [Müller, Scheichl, Shardlow (2014)]). To systematically compare the speed of these methods requires their careful and correct implementation. Since the multilevel algorithm is relatively complex, it is important to be able to easy switch between different time stepping algorithms in the code without compromising its correctness. For this I abstracted the most crucial components, such as the time stepping method, random number distribution and Langevin potential into a set of individual classes which are passed to the algorithms as template argumements. This allowed the fast and reliable testing of different methods which was crucial for comparing their numerical performance in a unified framework, see for example the results in [Katsiolides et al. (2016)].

Contributions to industrial codes

Met Office next generation model "LFRic"

Language: Fortran 2003

I develop and implement solvers and preconditioners for the Met Office next generation forecast model (codenamed LFRic), which is set to replace the Unified Model as the primary operational weather- and climate-prediction code post 2020. In particular I designed suitable data structures for the treatment of the strong vertical anisotropy in the used mimetic finite element discretisation. The ultimate goal is the implementation of a tensor-product geometric multigrid solver.

NAME Atmospheric Dispersion Model (link on Met Office webpage)

Language: Fortran 90

The NAME model is a world-leading numerical tool for predicting the spread and dispersion of atmospheric pollutants such as radioactive particles or volcanic ash. It is used by the Met Office both for research applications and in emergency response scenarios such as the 2010 eruption of the Eyjafjallajökull volcano. Because of the wide range of applications the code is very flexible and has to deliver highly reliable results; performance is crucial, in particular for operational use. As an atmospheric dispersion scientist at the Met Office I was reponsible for the parallelisation of the model in the OpenMP framework. This included the implementation of the parallelisation and careful testing to ensure the correctness and quantify speedups. Overall my work led to a tenfold increase in speed, and the new parallel capability was used in the emergency response for the subsequent eruption of the Grímsvötn volcano in 2011.

Met Office Unified Model (link on Met Office webpage)

Language: Fortran 90

The Unified Model is the Met Office's main tool for numerical weather and climate prediction. Speed is important in particular if the model is run operationally to produce daily weather forecasts. If an implicit time stepping method is used, an elliptic PDE for the pressure correction has to be solved in every model time step. To achieve optimal performance, the algorithmically best method should be used. For this I implemented a geometric tensor-product multigrid solver and tested its performance on up to 3,000 cores. Since the solver is integrated into the model I based it on existing data structures and adhered to coding standards. Compared to a BiCGStab solver, my multigrid implementation shows superior performance and is currently tested for operational release.

© Eike Müller, University of Bath. Last updated: Mon 28 Dec 2020 11:51:56h