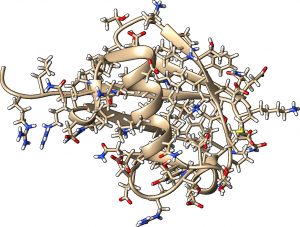

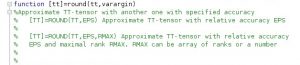

The tensor product methodology is one of the data compression approaches in mathematical modelling, based mainly on linear algebra. Tensor decompositions can significantly reduce the computational burden, sometimes cracking problems with $10^{100}$ or more unknowns that otherwise seem unsolvable. However, like many data-driven techniques, tensor methods work well only if the application under consideration admits such compression. We found positive examples in uncertainty quantification [21,30,12,6] and statistics [4,7,10,16], stochastic dynamical systems (Fokker-Planck [39,37] and master [22,29,31,5] equations), as well as optimal control of those [14,8], and quantum modelling (Schrödinger [33,32] and Liouville-von Neumann [36] equations). Of course, the numerical efficiency depends on all components of the scheme, and sometimes we also needed to develop a better preconditioner [42,27,20]. The tensor product formalism is also applicable to some nonlinear problems [15,11,35,20]. A further, explicit reduction of the computing burden is achieved with parallel tensor algorithms [17,19].
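To make the compression idea concrete, here is a minimal sketch in Python with NumPy. It assumes the tensor-train (TT) format as one representative tensor decomposition and builds it with sequential truncated SVDs; this is a generic textbook construction, not the algorithms of the papers cited above. The function names `tt_svd` and `tt_to_full`, the test function sin(x1 + ... + x6), and the truncation tolerance are all illustrative assumptions.

```python
import numpy as np


def tt_svd(tensor, tol=1e-10):
    """Split a full tensor into tensor-train cores via sequential truncated SVDs."""
    dims = tensor.shape
    d = len(dims)
    cores = []
    rank = 1
    # Unfold: first index as rows, all remaining indices as columns.
    mat = tensor.reshape(rank * dims[0], -1)
    for k in range(d - 1):
        u, s, vt = np.linalg.svd(mat, full_matrices=False)
        # Keep singular values above a relative threshold (at least one).
        new_rank = max(1, int(np.sum(s > tol * s[0])))
        cores.append(u[:, :new_rank].reshape(rank, dims[k], new_rank))
        # Carry the remainder forward and expose the next index as rows.
        mat = (s[:new_rank, None] * vt[:new_rank]).reshape(new_rank * dims[k + 1], -1)
        rank = new_rank
    cores.append(mat.reshape(rank, dims[-1], 1))
    return cores


def tt_to_full(cores):
    """Contract the TT cores back into the full tensor (small cases only)."""
    full = cores[0]
    for core in cores[1:]:
        full = np.tensordot(full, core, axes=([-1], [0]))
    return full.reshape([c.shape[1] for c in cores])


# Illustrative test case: f(x1, ..., x6) = sin(x1 + ... + x6) on an 8-point grid.
d, n = 6, 8
grid = np.linspace(0.0, 1.0, n)
mesh = np.meshgrid(*([grid] * d), indexing="ij")
full = np.sin(sum(mesh))

cores = tt_svd(full)
ranks = [c.shape[2] for c in cores[:-1]]
tt_size = sum(c.size for c in cores)
rel_err = np.linalg.norm(tt_to_full(cores) - full) / np.linalg.norm(full)

print("TT ranks:", ranks)
print("full entries:", full.size, "TT entries:", tt_size)
print("relative error:", rel_err)
```

The full array grows as n^d, whereas the TT storage grows only linearly in d for bounded ranks, which is what makes very high-dimensional problems tractable when the ranks stay small. Note that this sketch forms the full tensor first, so it only illustrates the format; practical methods for large d build the cores without ever storing the full array.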

Further details are organised into the following categories:

Funded projects

- 2020-2023: EPSRC New Horizons, "Overcoming the curse of dimensionality in dynamic programming by tensor decompositions" (with Dante Kalise)

- 2020-2025: EPSRC New Investigator Award, "Tensor decomposition sampling algorithms for Bayesian inverse problems"