- I am a co-developer of TT-Toolbox: an open-source object-oriented MATLAB/C/Fortran package for computations in the Tensor Train and QTT-Tucker tensor product formats.

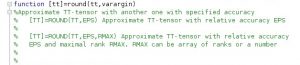

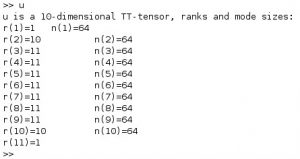

Main features include:

- Overloaded linear algebra with matrices and vectors in a tensor format.

- Compression of given data into a format by direct (SVD) and sparse (cross) procedures.

- A collection of examples with analytical tensor formats (finite difference Laplacian, etc.)

- Alternating iterative algorithms for solution of linear systems and eigenvalue problems.
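
As an illustration of what an analytical tensor format looks like, the sketch below stores the tensor with entries i1 + ... + id in Tensor Train form with all ranks equal to 2 and evaluates single entries from the cores. It is plain Python written for illustration and is not TT-Toolbox code; the names `tt_element` and `sum_tensor_cores` are hypothetical.

```python
def tt_element(cores, index):
    """Evaluate one entry of a tensor stored as a list of TT cores.

    cores[k][i] is the r_{k-1} x r_k matrix (a list of rows) selected by
    the value i of the k-th index; r_0 = r_d = 1.
    """
    row = [1.0]  # 1 x r_0 row vector
    for core, i in zip(cores, index):
        mat = core[i]
        # Multiply the running row vector by the selected core slice.
        row = [sum(row[a] * mat[a][b] for a in range(len(row)))
               for b in range(len(mat[0]))]
    return row[0]  # r_d = 1, so a single number remains


def sum_tensor_cores(n, d):
    """Rank-2 TT cores of T[i1, ..., id] = i1 + ... + id (requires d >= 2)."""
    first = [[[float(i), 1.0]] for i in range(n)]                # 1 x 2 slices
    middle = [[[1.0, 0.0], [float(i), 1.0]] for i in range(n)]   # 2 x 2 slices
    last = [[[1.0], [float(i)]] for i in range(n)]               # 2 x 1 slices
    return [first] + [middle] * (d - 2) + [last]


cores = sum_tensor_cores(n=4, d=5)
value = tt_element(cores, (1, 2, 3, 0, 2))  # entry of a 4^5 tensor
```

The full tensor in this example has 4^5 entries, while the cores hold at most 4 numbers per index value and dimension, which is the kind of saving the formats are designed for.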


- tAMEn: pure MATLAB routines for the alternating iterative solution of ordinary differential equations in the tensor train format.

See “A tensor decomposition algorithm for large ODEs with conservation laws”, [CMAM-2018-0023] or [arXiv:1403.8085].

The main features of the new algorithm are spectral accuracy in time and the ability to conserve linear invariants and the second norm up to machine precision, rather than only up to the tensor approximation error.

Another difference from TT-Toolbox is the storage scheme, which allows sparse tensor product factors.

New: adaptive time discretisation.
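
To make the two conserved quantities concrete, consider a linear ODE with a constant matrix. The notation $A$, $x$, $c$ is introduced here only for illustration and is an assumption about the setting. The standard identities below show when the exact solution preserves a linear invariant and the second norm:

```latex
\frac{dx}{dt} = A x, \qquad
c^\top A = 0 \;\Rightarrow\; \frac{d}{dt}\bigl(c^\top x\bigr) = c^\top A x = 0, \qquad
A^\top = -A \;\Rightarrow\; \frac{d}{dt}\|x\|_2^2 = x^\top \bigl(A + A^\top\bigr) x = 0.
```

A tensor approximation of $x$ generally perturbs both quantities at the level of the truncation error, which is why conservation up to machine precision is a separate property.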


- ALS-Cross algorithm: a first attempt at a Tensor Train Uncertainty Quantification toolkit, accompanying the corresponding paper [10].

A combination of the ALS and TT Cross algorithms, together with high-level drivers for solving stochastic and parametric PDEs (a.k.a. high-dimensional linear systems with block-diagonal matrices).
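
The remark about block-diagonal matrices can be illustrated with a toy parametric system: for each parameter value y, one solves an independent small system (A0 + y A1) u(y) = f, and stacking all parameter values gives one large block-diagonal system. The plain-Python sketch below uses hypothetical helper names (`solve2`, `parametric_solutions`) and 2 x 2 blocks; it is not the ALS-Cross code and performs no tensor compression.

```python
def solve2(a, f):
    """Solve a 2 x 2 linear system a u = f by Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [(f[0] * a[1][1] - a[0][1] * f[1]) / det,
            (a[0][0] * f[1] - a[1][0] * f[0]) / det]


def parametric_solutions(a0, a1, f, ys):
    """Solve (a0 + y * a1) u(y) = f independently for every y in ys.

    Each y contributes one diagonal block of the stacked system, so the
    blocks never couple and can be solved one at a time.
    """
    out = []
    for y in ys:
        a = [[a0[r][c] + y * a1[r][c] for c in range(2)] for r in range(2)]
        out.append(solve2(a, f))
    return out


# Two parameter values give two diagonal blocks and two solution vectors.
sols = parametric_solutions([[2.0, 0.0], [0.0, 2.0]],
                            [[1.0, 0.0], [0.0, 0.0]],
                            [1.0, 1.0],
                            ys=[0.0, 2.0])
```

With many parameters, the set of all parameter combinations grows exponentially, so the solutions cannot be enumerated this way; this is where a tensor format for the array of solutions becomes necessary.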


- TT-IRT: MCMC and QMC samplers based on the inverse Rosenblatt transformation (IRT) via a TT approximation of a probability density function [5].

The IRT produces independent samples from a TT approximation of the target density. If the TT approximation is accurate, these samples can be used directly to integrate a quantity of interest that may not itself admit a TT decomposition. Moreover, even if the TT surrogate is inaccurate, the IRT samples can serve as effective independence proposals in MCMC or in Importance Weighting. This makes it possible to balance the computing cost between the TT approximation and sampling stages.
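
The idea behind the inverse Rosenblatt transformation can be sketched on a small two-dimensional grid: the first index is drawn by inverting the marginal distribution function, and the second by inverting the conditional distribution function given the first. In the plain-Python sketch below a full table of non-negative values stands in for the TT approximation of the density (an assumption made for brevity), and the names `invert_cdf` and `irt_sample` are hypothetical; this is not the TT-IRT code.

```python
def invert_cdf(weights, u):
    """Return the first index k whose cumulative weight exceeds u * total."""
    total = sum(weights)
    acc = 0.0
    for k, w in enumerate(weights):
        acc += w
        if u * total < acc:
            return k
    return len(weights) - 1  # guards against round-off when u is close to 1


def irt_sample(density, u1, u2):
    """Map a uniform seed (u1, u2) in [0, 1)^2 to a grid index (i, j).

    density[i][j] holds unnormalised non-negative values on the grid.
    """
    marginal = [sum(row) for row in density]  # p(i) is proportional to sum_j p(i, j)
    i = invert_cdf(marginal, u1)
    j = invert_cdf(density[i], u2)            # p(j | i) is proportional to row i
    return i, j


density = [[1.0, 3.0],
           [4.0, 2.0]]
sample = irt_sample(density, 0.3, 0.5)
```

Feeding pseudo-random seeds into such a map gives independent samples, while feeding a low-discrepancy point set gives a QMC rule. In a TT setting the marginals would be computed from the TT cores instead of by summing a full table.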

NEW: Deep Inverse Rosenblatt Transformation driven by Tensor Trains [1]. A sequence of IRTs built from tempered functions can be seen as a generator of a high-dimensional mesh that brings functions of similar “shape” (e.g. the original function tempered with a different power) closer to a tensor product function.
